The demand for outdoor autonomy isn’t a far-future concept; it’s happening right now. The first major trend we identified is the “indoor-to-outdoor” market. This covers operations that start inside a manufacturing plant and must end outside, for example in a storage yard or a similar area. Think of steel fabrication, pipe manufacturing, or construction materials. A product is made indoors, but it’s too large or rugged to be stored there. It needs to be autonomously picked up, driven out of the building, and placed in a specific rack in an outdoor yard, all in one seamless operation.

Beyond that, we see massive potential in several key sectors:

- Ports and Terminals: Automating the movement of straddle cranes and container handlers is a 24/7 challenge that requires millimeter-level precision in harsh, dynamic, and wide-open environments. Read our blog: Solution to ports

- Ground Support Equipment (GSE): Airports buzz with continuous activity. Baggage tractors, cargo loaders, and other support vehicles navigating complex tarmac environments are prime targets for automation, with clear gains in efficiency and safety. Read our blog: Airport visions

- Construction: While full-scale site automation is complex, the technology is already in use, for example in road building. Companies like Caterpillar and John Deere have invested heavily in telematics and automation, and the need to move materials autonomously on large commercial sites is growing rapidly. Read more about our activities here.

- Mining: This is one of the original use cases. Our partners at Navitec Systems, for instance, have been working with mining companies on autonomous mining vehicles since 1998. GIM is also currently providing solutions to some of the flagship companies in this field and participating in a Futura project. These machines operate in some of the most difficult conditions imaginable, entirely underground.

- Other key areas we’re watching include agriculture, last-mile delivery, and military or surveillance applications.

The problem? All these applications share a common set of brutal operational challenges.

A Powerful Partnership: GIM Robotics + Navitec Systems’ Joint SW Stack

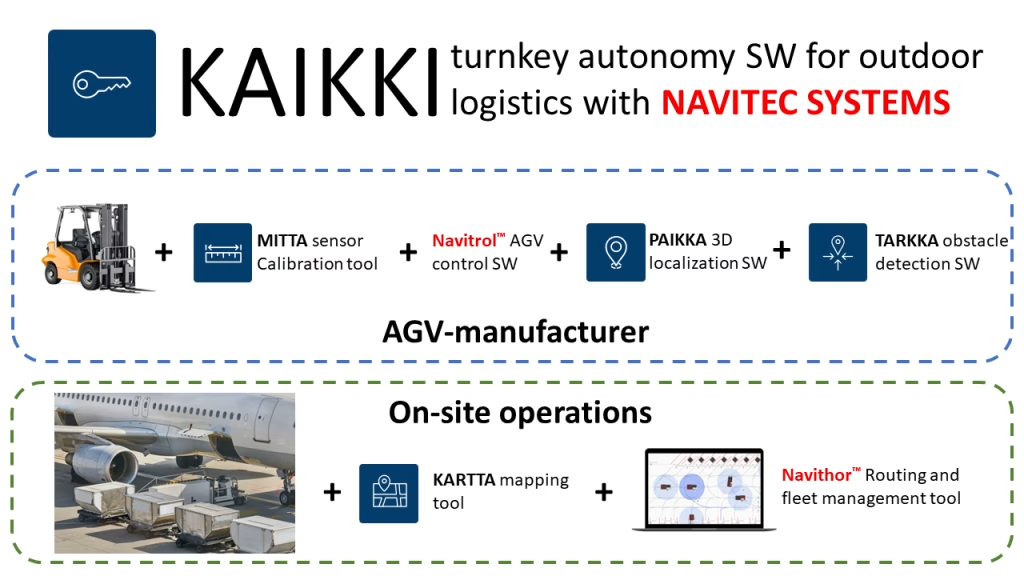

To tackle a problem this big, you need a comprehensive solution. That’s why GIM and Navitec, both headquartered in Finland and sharing a formidable talent pool of nearly 100 robotics engineers, have partnered. Together, we offer a complete, end-to-end software stack for any outdoor autonomous vehicle.

Think of it in three layers:

- Vehicle Control (Navitec): At the bottom layer, Navitec’s software provides the “drive-by-wire” vehicle control and navigation. It tells the wheels how to turn and the motors how to spin to execute a path.

- Localization & Perception (GIM): This is the middle layer, and it’s GIM’s core expertise. This is the “brain” that answers the two most critical questions: “Where am I?” and “What is around me?” We provide the vehicle with its position in the world, accurate down to the centimetre level.

- Fleet Management (Navitec): At the top layer, Navitec provides the “air traffic control” system. This fleet manager coordinates all the vehicles, assigns tasks (with support for standards like VDA 5050), and even integrates manual vehicles to prevent collisions and create a holistic site-wide solution.
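To make the division of responsibilities concrete, here is a minimal Python sketch of how the three layers might hand data to one another in a single control step. Every class and method name (`FleetManager`, `Localizer`, `VehicleController`, `heading_error`) is hypothetical, the localizer simply returns a fixed pose, and nothing here reflects the actual Navitec or GIM interfaces or the VDA 5050 message format.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pose:
    x: float        # metres, site frame
    y: float        # metres, site frame
    heading: float  # radians


class Localizer:
    """Middle layer (hypothetical interface): answers "Where am I?"."""

    def __init__(self, initial_pose: Pose):
        self._pose = initial_pose

    def current_pose(self) -> Pose:
        # A real localizer would update this from LiDAR scans; here it is fixed.
        return self._pose


class VehicleController:
    """Bottom layer (hypothetical): turns a target point into a heading error."""

    def heading_error(self, pose: Pose, target: tuple) -> float:
        desired = math.atan2(target[1] - pose.y, target[0] - pose.x)
        # Wrap the difference into [-pi, pi) so the vehicle turns the short way.
        return (desired - pose.heading + math.pi) % (2 * math.pi) - math.pi


class FleetManager:
    """Top layer (hypothetical): assigns routes to vehicles by ID."""

    def __init__(self):
        self._routes = {}

    def assign(self, vehicle_id: str, waypoints: list) -> None:
        self._routes[vehicle_id] = list(waypoints)

    def next_waypoint(self, vehicle_id: str) -> Optional[tuple]:
        route = self._routes.get(vehicle_id, [])
        return route[0] if route else None


# Wiring the three layers together for a single control step.
fleet = FleetManager()
fleet.assign("tugger-1", [(0.0, 5.0), (10.0, 5.0)])
localizer = Localizer(Pose(0.0, 0.0, 0.0))
controller = VehicleController()
error = controller.heading_error(localizer.current_pose(), fleet.next_waypoint("tugger-1"))
```

The point of the layering is that each layer depends only on the output of the one above or below it: the controller never needs to know how the pose was computed, and the fleet manager never needs to know how the wheels are driven.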

Together, this partnership delivers what the market demands: an infrastructure-free, all-weather, and truly robust autonomy solution, KAIKKI, that can be deployed on virtually any vehicle type, from a 500 kg tugger to a 500-ton crane. See the concept diagram below.

The Core Challenge: Why Outdoor Localization is So Hard

It is worth explaining the key technical hurdles that make outdoor autonomy so much more difficult than its indoor counterpart:

1. The Terrain

Unlike a flat warehouse floor, the outdoors is rough. Vehicles must handle uneven ground, steep slopes, mud, and gravel. On an autonomous truck, the cabin and sensors can pitch and roll dramatically relative to the chassis. This makes simple wheel odometry (tracking how far the wheels have turned) extremely unreliable. The vehicle thinks it has moved two meters forward when, in reality, it has climbed one meter up a steep slope and covered much less ground.
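A small worked example shows why flat-ground odometry breaks down on slopes. This is a simplified sketch, assuming a planar slope, a known chassis pitch, and no wheel slip; real terrain adds slip in mud and gravel on top of this. The function names are hypothetical.

```python
import math


def split_wheel_travel(wheel_distance_m: float, pitch_rad: float) -> tuple:
    """Split distance rolled along a planar slope into horizontal and vertical parts.

    Assumes a rigid vehicle, a known chassis pitch, and no wheel slip.
    """
    horizontal = wheel_distance_m * math.cos(pitch_rad)
    vertical = wheel_distance_m * math.sin(pitch_rad)
    return horizontal, vertical


def flat_ground_error(wheel_distance_m: float, pitch_rad: float) -> float:
    """Horizontal position error of an odometry model that assumes flat ground."""
    horizontal, _ = split_wheel_travel(wheel_distance_m, pitch_rad)
    return wheel_distance_m - horizontal


# Two metres of wheel travel up a 30-degree slope.
horizontal, vertical = split_wheel_travel(2.0, math.radians(30.0))
error = flat_ground_error(2.0, math.radians(30.0))
```

Two meters of wheel travel up a 30-degree slope is the case described above: the vehicle climbs 1.0 m but advances only about 1.73 m horizontally, so a flat-ground model overestimates its progress by roughly 0.27 m every two meters. If the pitch is read from a sensor on the cabin, which moves relative to the chassis, even this correction is off.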

2. The Weather

This is the most obvious challenge, and it’s one we live and breathe in our “backyard” test facility here in Finland. Our solution must work flawlessly in:

- Snow: Covers the ground, blocks sensors, and creates false readings.

- Rain: Heavy downpours create reflections and absorb light, making it hard for sensors to “see.”

- Fog: Acts like a wall to many sensors, severely limiting their range.

- Dust: Common in mining and construction, it can completely blind a sensor.

3. The Environment (Dynamic & Sparse)

This is the most complex challenge.

First, GNSS (like GPS) is not a silver bullet. While RTK-GNSS can provide centimetre-level accuracy, it’s not 100% reliable. In the “urban canyons” of a port (stacked containers) or a manufacturing plant (tall buildings), the signal reflects off surfaces (multipathing) or disappears entirely. This is especially true during the critical indoor-to-outdoor transition, where the vehicle has no usable satellite position until it acquires a stable lock. You must also account for signal jamming, spoofing, and even solar flares, which can take a whole fleet offline. You cannot build a mission-critical industrial solution that relies only on GNSS.
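One way to avoid depending only on GNSS is to treat the map-based LiDAR estimate as the primary position source and accept a GNSS reading only when it is both RTK-fixed and consistent with LiDAR. The sketch below illustrates that gating idea; the `FixType` categories, the 0.5 m gate, and the fixed blending weight are hypothetical choices, and a production system would normally fuse sources with a proper filter (such as a Kalman filter) using each sensor's uncertainty rather than a constant weight.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class FixType(Enum):
    """Hypothetical GNSS fix categories, simplified from typical receiver outputs."""
    NONE = 0
    SINGLE = 1
    RTK_FLOAT = 2
    RTK_FIXED = 3


@dataclass
class GnssReading:
    x: float  # metres, already projected into the site frame
    y: float
    fix: FixType


def fuse_position(
    lidar_xy: Tuple[float, float],
    gnss: Optional[GnssReading],
    gate_m: float = 0.5,
    gnss_weight: float = 0.3,
) -> Tuple[Tuple[float, float], str]:
    """Use the map-based LiDAR estimate as primary; blend in GNSS only when it is
    RTK-fixed and agrees with LiDAR to within gate_m metres."""
    if gnss is None or gnss.fix is not FixType.RTK_FIXED:
        # No signal (e.g. indoors, between container stacks) or a degraded fix.
        return lidar_xy, "lidar"
    if math.dist(lidar_xy, (gnss.x, gnss.y)) > gate_m:
        # A large disagreement suggests multipath, jamming, or spoofing: reject GNSS.
        return lidar_xy, "lidar (gnss rejected)"
    x = (1.0 - gnss_weight) * lidar_xy[0] + gnss_weight * gnss.x
    y = (1.0 - gnss_weight) * lidar_xy[1] + gnss_weight * gnss.y
    return (x, y), "fused"
```

With this structure, losing GNSS entirely (for instance at the indoor-to-outdoor doorway) simply means the vehicle keeps running on its LiDAR estimate instead of stopping.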

Second, the environment is dynamic. For example, in a busy indoor warehouse your map from yesterday might be useless today. Pallets are moved, trucks are parked, and temporary obstacles appear. If your localization system relies on “seeing” specific pallet stacks that are no longer there, it will fail.

GIM’s Solution: 3D LiDAR and Normal Distribution Transform (NDT)

So, how do you solve this? The answer is robust sensor fusion. Our system is hardware-agnostic, but our core localization stack is built around high-performance 3D LiDAR.

Why 3D LiDAR?

- 2D LiDAR (common indoors) struggles outdoors because it sees very few features at ground level.

- Cameras are promising but are severely affected by lighting changes (day/night, sun glare) and weather.

- Radar is great in bad weather but lacks the precision and resolution for accurate localization.

3D LiDAR gives us a rich, 360-degree, high-resolution point cloud of the environment in all conditions. But processing hundreds of thousands of points per second is computationally massive. This is where GIM’s key intellectual property comes in: Normal Distribution Transform (NDT).

Instead of trying to match 100,000 individual points to a map, our NDT algorithm groups these points into 3D cubes, or “voxels.” It then represents all the points inside each voxel not as individual points, but as a single statistical model: a 3D normal distribution (a mean and a covariance), which can be pictured as an ellipsoid.
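The voxelization step can be sketched in a few lines of plain Python. This follows the textbook Normal Distributions Transform (a mean and covariance per voxel), not GIM's proprietary implementation; the function name and the `min_points` threshold are hypothetical.

```python
import math
from collections import defaultdict


def build_ndt_voxels(points, voxel_size, min_points=5):
    """Summarize a 3D point cloud as per-voxel Gaussians (mean, covariance).

    points: iterable of (x, y, z) tuples in metres.
    Returns {voxel_index: (mean, covariance)}; voxels with fewer than
    min_points points are skipped because their covariance is unreliable.
    """
    buckets = defaultdict(list)
    for p in points:
        # math.floor keeps negative coordinates in the correct voxel.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)

    voxels = {}
    for key, pts in buckets.items():
        n = len(pts)
        if n < min_points:
            continue
        mean = [sum(p[i] for p in pts) / n for i in range(3)]
        # Sample covariance (n - 1); its eigenvectors and eigenvalues give the
        # ellipsoid's axes and extents.
        cov = [
            [sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / (n - 1) for j in range(3)]
            for i in range(3)
        ]
        voxels[key] = (mean, cov)
    return voxels
```

Each kept voxel stores 3 mean values and 6 unique covariance entries no matter how many points fall inside it, so a voxel holding 200 points (600 coordinates) shrinks to 9 numbers.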

This has two massive benefits:

- Extreme Efficiency: We reduce a massive point cloud to a much smaller, lighter set of statistical data. Autonomous cars often need a “suitcase of NVIDIA GPUs” to run. Our NDT-based solution can run on a simple, low-cost industrial PC.

- Unmatched Robustness: Our system is built for dynamic environments. When we map a site, our algorithm identifies which parts are static (buildings, ceilings, permanent racks, etc.) and which are dynamic (e.g., pallets, parked vehicles). It then learns to trust the static parts and ignore the dynamic ones. When that pallet stack disappears, our system doesn’t get lost because it was never using that pallet for localization in the first place.
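The source does not say how GIM's algorithm separates static from dynamic structure. One simple way to illustrate the idea is persistence: map the site several times and keep only the voxels that are occupied in most runs. In this hypothetical sketch, `static_voxels` and its 80% threshold are assumptions for illustration.

```python
from collections import Counter


def static_voxels(sessions, min_fraction=0.8):
    """Keep voxels that were occupied in at least min_fraction of mapping sessions.

    sessions: list of sets, each holding the voxel indices occupied in one
    mapping run (e.g. recorded on different days).
    """
    if not sessions:
        return set()
    counts = Counter()
    for occupied in sessions:
        counts.update(occupied)  # a set counts each voxel once per session
    needed = min_fraction * len(sessions)
    return {voxel for voxel, seen in counts.items() if seen >= needed}


wall, ceiling, pallet = (0, 0, 0), (0, 0, 3), (4, 1, 0)
runs = [
    {wall, ceiling, pallet},
    {wall, ceiling},
    {wall, ceiling, pallet},
    {wall, ceiling},
    {wall, ceiling},
]
stable = static_voxels(runs)
```

Here the wall and ceiling voxels appear in every run and are kept, while the pallet voxel (seen in 2 of 5 runs) is dropped, so a localizer built on `stable` never depends on it.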

This is a key distinction from simple SLAM (Simultaneous Localization and Mapping). We use SLAM once to build the initial map. After that, the system runs in pure localization mode, referencing that stable, reliable map. This prevents map “pollution” and drift, ensuring long-term stability with a stop precision of plus-or-minus 1 to 2 centimetres. Read more in our blog post Using SLAM Wisely.
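The separation between a one-time mapping step and pure localization can be illustrated with a 2D NDT sketch: the map is built once and only read afterwards, and each new scan is scored against it. This is a simplified, hypothetical example (all function names are ours): it searches translations on a grid with the heading held fixed, whereas real NDT implementations typically refine the full pose with an iterative optimizer such as Newton's method.

```python
import math
from collections import defaultdict


def build_ndt_2d(points, cell, min_points=3, eps=1e-3):
    """Build a 2D NDT map once: {cell_index: (mean_x, mean_y, inverse_covariance)}."""
    buckets = defaultdict(list)
    for x, y in points:
        buckets[(math.floor(x / cell), math.floor(y / cell))].append((x, y))
    cells = {}
    for key, pts in buckets.items():
        n = len(pts)
        if n < min_points:
            continue
        mx = sum(p[0] for p in pts) / n
        my = sum(p[1] for p in pts) / n
        # eps on the diagonal keeps flat structures (walls) invertible.
        sxx = sum((p[0] - mx) ** 2 for p in pts) / n + eps
        syy = sum((p[1] - my) ** 2 for p in pts) / n + eps
        sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / n
        det = sxx * syy - sxy * sxy
        # Inverse of [[sxx, sxy], [sxy, syy]] stored as (xx, xy, yy).
        cells[key] = (mx, my, (syy / det, -sxy / det, sxx / det))
    return cells


def ndt_score(cells, scan, tx, ty, theta, cell):
    """Sum of Gaussian likelihoods of scan points placed at pose (tx, ty, theta)."""
    c, s = math.cos(theta), math.sin(theta)
    score = 0.0
    for x, y in scan:
        wx, wy = c * x - s * y + tx, s * x + c * y + ty
        key = (math.floor(wx / cell), math.floor(wy / cell))
        if key not in cells:
            continue
        mx, my, (a, b, d) = cells[key]
        dx, dy = wx - mx, wy - my
        m2 = a * dx * dx + 2 * b * dx * dy + d * dy * dy  # squared Mahalanobis distance
        score += math.exp(-0.5 * m2)
    return score


def localize(cells, scan, cell, guess, search=0.5, step=0.1):
    """Brute-force translation search around guess=(tx, ty, theta); the map is read-only."""
    steps = round(search / step)
    best = None
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            tx, ty = guess[0] + i * step, guess[1] + j * step
            score = ndt_score(cells, scan, tx, ty, guess[2], cell)
            if best is None or score > best[0]:
                best = (score, tx, ty)
    return best[1], best[2]


# Map: two perpendicular walls, built once (the mapping step).
walls = [(0.05 + 0.1 * i, 0.5) for i in range(50)] + [(0.5, 0.05 + 0.1 * i) for i in range(50)]
ndt_map = build_ndt_2d(walls, cell=1.0)
# A scan taken with the vehicle at (0.3, -0.2), expressed in the vehicle frame.
scan = [(x - 0.3, y + 0.2) for x, y in walls]
pose = localize(ndt_map, scan, cell=1.0, guess=(0.0, 0.0, 0.0))
```

The search recovers the pose (0.3, -0.2) at which the scan was taken, and `ndt_map` is never modified along the way, so objects seen in later scans cannot pollute it.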

From Sandbox to Solution: Real-World Autonomy in Action

This technology isn’t just theory; it’s deployed in the field. There are plenty of real-world applications that prove the power of this approach, such as:

- Sandvik (Mining): These massive, autonomous mining vehicles navigate complex, dark, and dusty underground tunnels – a prime example of a GNSS-denied environment where map-based localization excels.

- Technacar (Airport GSE): A joint pilot at Helsinki Airport showed an autonomous baggage tugger navigating the tarmac, proving the solution’s viability for the complex logistics of a busy airport. See a short video.

- Trombia (Street Sweepers): The Trombia Free, an outstanding autonomous street sweeper that GIM Robotics helped develop, is a perfect showcase. It operates entirely on our 3D localization stack. It needs no GNSS, allowing it to work flawlessly in urban canyons, tunnels, and under bridges. It’s fully electric, efficient, and operates in all weather, day or night. See the system in action.

The future of autonomy is outdoors. It’s complex, it’s challenging, but with the right sensor fusion technology, it’s already being solved.

Interested in learning how GIM Robotics’ 3D localization software can power your autonomous outdoor vehicles?

Contact our team today.

The above blog text is based on the AGV Network webinar of October 22nd, 2025:

Our CTO and co-founder, Dr. Jose Luis Peralta, recently joined Peter Secor, SVP of Global Business Development at Navitec Systems, for a deep-dive technical webinar hosted by AGV Network. Together, they explored the emerging markets for outdoor autonomy, the tough problems that have held the industry back, and the powerful, all-weather solution GIM Robotics and Navitec Systems have co-developed to solve them. You can see the recording below.